The NAIRR Report: A Vision for Democratizing AI Research and Innovation

Ellie Sakhaee, PhD, MBA, EqualAI Fellow & Jim Wiley, Legal and Research Director, EqualAI

Mar 14, 2023

Recent advances in AI, such as the powerful large language models (LLMs) dominating the headlines, are enhancing our efficiency and capturing our imaginations, but these models are highly resource-intensive to train. Because of the large computational lift required to develop them, only a handful of well-resourced companies and academic institutions can access and train such models; as a result, few companies are able to compete and innovate in this space.

To democratize the AI innovation ecosystem in the United States and to accelerate AI research and development (R&D), Congress, through the National AI Initiative Act of 2020, called on the National Science Foundation (NSF), in coordination with the White House Office of Science and Technology Policy (OSTP), to form a National AI Research Resource (NAIRR) Task Force. The Task Force’s mission was to explore the “feasibility and advisability” of developing the NAIRR as a national AI research infrastructure and to propose a roadmap for how it should be established and sustained. Congress directed that the Director of OSTP and the Director of NSF, or their designees, serve as co-chairpersons of the Task Force.

The Task Force was composed of 12 technical experts and met over 18 months to fulfill its mandate. In addition to issuing a request for public comments, the co-chairs hosted a public listening session on June 23, 2022, to gather further public input. On January 24, 2023, the Task Force released its final report, which presents an implementation plan for a national cyberinfrastructure (the NAIRR) aimed at broadening the range of researchers involved in AI and at growing and diversifying approaches to, and applications of, AI for societal and economic benefit. As envisioned in the report, the NAIRR would transform the AI research landscape and empower researchers to address societal-level problems by expanding and democratizing participation in foundational, use-inspired, and translational AI R&D in the United States.

The NAIRR Task Force report

The NAIRR Task Force report (the “Report”) lays out a detailed roadmap for a shared research infrastructure (“the NAIRR”) that would provide AI researchers and students with significantly expanded access to computational resources, high-quality data, educational tools, and user support. The Report sets four measurable goals for the NAIRR (see Fig. 1): (1) spur innovation; (2) increase diversity of talent; (3) improve capacity; and (4) advance trustworthy AI. Notably, the inclusion of trustworthy AI among the four goals reflects the growing attention that AI safety and trustworthiness have garnered at the research stage.

Fig. 1. The four measurable goals of the NAIRR. [Image Source: The NAIRR report]

The roadmap envisioned in the report is designed to meet the national need for increased access to the state-of-the-art resources that fuel AI innovation. It builds on existing Federal investments; designs in protections for privacy, civil rights, and civil liberties; and promotes diversity and equitable access to AI infrastructure. The roadmap describes in detail the approach to establishing the NAIRR and its organization, management, and governance structure, proposing “a cooperative stewardship model.” Under this model, one agency would serve as the “administrative home” for the NAIRR, supported by a multi-agency Steering Committee composed of principals from Federal agencies with equities in AI research. The Steering Committee would provide collective oversight, and three advisory bodies (Science, Technology, and Ethics) would provide strategic management advice to inform the NAIRR’s operations.

The report also details the proposed infrastructure of the NAIRR. It recommends that the NAIRR offer a “federated mix of computational and data resources, testbeds, software, testing tools, and user support services via an integrated portal” from a variety of providers. The NAIRR should be broadly accessible to a range of users and offer a platform for education and community-building activities to lower barriers to entry and increase the diversity of AI researchers. The report further envisions a NAIRR portal and website with catalogs and discovery tools to help users at different levels of experience find data, testbeds, and educational and training resources. If successful, the NAIRR would have a significant and far-reaching impact, enabling researchers to tackle problems ranging from routine tasks to global challenges.

Trustworthy AI as one of the four main goals of the NAIRR

It is noteworthy that trustworthy AI is one of the four pillars of the NAIRR. The report approaches the notion of trustworthy AI from two perspectives: (1) developing AI models and using data responsibly (i.e., trustworthy research); and (2) building novel tools to enhance trustworthy AI across the field (i.e., research on trustworthiness).

According to the report, “[t]he NAIRR should serve as an exemplar for how transparent and responsible AI R&D can be performed,” and proactively address privacy, civil rights, and civil liberties issues by integrating appropriate technical controls, policies, and governance mechanisms from its outset. Establishing an Ethics Advisory Board to advise on issues of ethics, fairness, bias, accessibility, and AI risks and blind spots would be a strong step toward building trustworthy AI.

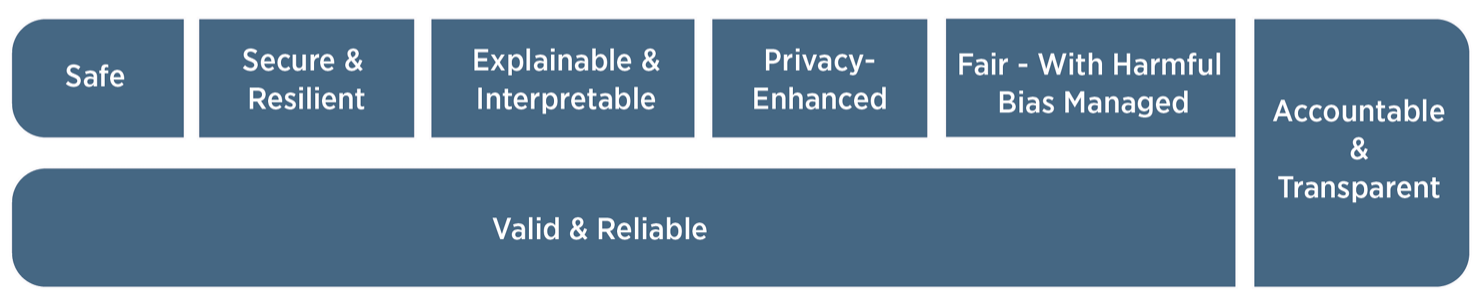

The report recommends that NAIRR decision-makers (including the Steering Committee, Program Management Office, and Operating Entity) draw on the expectations for automated systems described in the White House Blueprint for an AI Bill of Rights, as well as on best practices defined in the National Institute of Standards and Technology (NIST) AI Risk Management Framework (AI RMF). The Blueprint for an AI Bill of Rights, released by the White House in 2022, outlines five principles to help guide the design, implementation, and deployment of automated systems to protect the rights of the American public. The AI RMF provides organizations and AI developers with practical guidelines and best practices for incorporating AI trustworthiness characteristics (see Fig. 2) into AI products, services, and systems. According to the report, when implementing system safeguards, the NAIRR should leverage the NIST AI RMF guidelines and the Five Safes framework: safe projects, safe people, safe settings, safe data, and safe outputs.

Fig. 2. Characteristics of trustworthy AI systems as described in NIST’s AI Risk Management Framework [Image Source: NIST AI RMF]

How can EqualAI help?

The Report recommends that the entity operating the NAIRR, with the support of the Ethics Advisory Board, develop criteria and mechanisms for evaluating research and resource proposals from privacy, civil rights, and civil liberties perspectives.

We recently released an EqualAI AI Impact Assessment (AIA) tool that incorporates key elements from the NIST AI RMF, the accompanying Playbook, and NIST Special Publication 1270 (“Towards a Standard for Identifying and Managing Bias in Artificial Intelligence”). The EqualAI AIA tool aims to help organizations evaluate their AI systems throughout the AI lifecycle and provides an example of how entities can incorporate the NIST AI RMF and adapt it to their needs. EqualAI is piloting the AIA tool with partner companies to further refine and personalize it for leaders in responsible AI. Companies whose leadership is interested in participating are encouraged to reach out for our support.

The AIA can also serve as a resource for academic institutions evaluating their NAIRR research proposals from a bias and AI risk perspective, helping ensure alignment with the NAIRR’s trustworthy AI goals. EqualAI also offers programs and membership for companies and organizations that want to lead on and promote responsible AI governance. Reach out for further ideas or to work with EqualAI.