Analysis

NIST will cultivate trust in AI by developing a framework for AI risk management (AI RMF)

Ellie Sakhaee, PhD, MBA, EqualAI Fellow

Aug 10, 2022

Image by DALL-E mini, generated for “AI Risk Management Framework”

Despite their astonishing capabilities, today’s AI systems come with various societal risks, such as discriminatory outputs and privacy violations. Minimizing such risks can therefore lead to AI systems that are better aligned with societal values and, hence, more trustworthy. Directed by Congress, NIST has taken important steps to establish a framework for managing risks associated with AI systems by creating a process to identify, measure, and minimize those risks.

More than 167 guidelines and sets of principles have been developed for trustworthy, responsible AI. They generally lay out high-level principles. The NIST framework, however, differs from many others because it aims to translate principles “into technical requirements that can be used by designers, developers, and evaluators to test the systems for trustworthy AI,” as Elham Tabassi, the Chief of Staff at the Information Technology Laboratory (ITL) at NIST, said on the In AI we Trust? podcast with EqualAI and the World Economic Forum.

When complete early in 2023, the AI Risk Management Framework (RMF) will be a three-part voluntary guidance that outlines a process for addressing risks throughout the AI lifecycle. Part I of the RMF, Motivation, establishes the context for the AI risk management process and provides a taxonomy of AI risk characteristics. Part II, Core and Profiles, offers guidance and actions to manage AI risks. Part III, Practice Guide, currently under development, will include additional examples and practices that can assist in using the AI RMF.

Aspects of governance will not be addressed in this framework. Rather, NIST has made the strategic and well-founded decision not to cover tangential risks that other frameworks already handle, such as software security, privacy, safety, and infrastructure, and instead to defer to the guidance already available.

Part I of the framework provides a taxonomy of risks

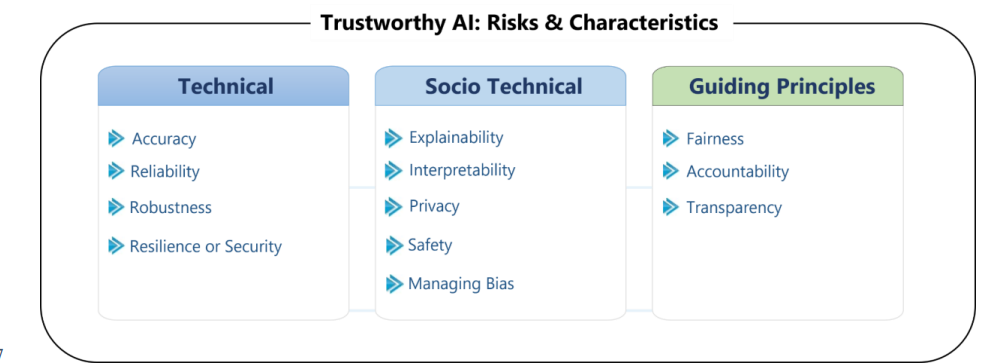

The framework defines risk as “a measure of the extent to which an entity is negatively influenced by a potential circumstance or event.” It then identifies the risks that need to be minimized to achieve trustworthy AI and categorizes them into a three-class taxonomy (Figure 1):

- Technical characteristics: “factors that are under the direct control of AI system designers and developers.”

- Socio-technical characteristics: “how AI systems are used or perceived in individual, group, or social contexts.”

- Guiding principles: broader and qualitative “norms and values that indicate societal priorities.”

Fig. 1. The NIST AI RMF outlines a three-class taxonomy for risks and characteristics of trustworthy AI. Each characteristic is then defined and several examples are provided (see Section 5 of the draft AI RMF for details).

Part II describes functions and activities for AI risk management

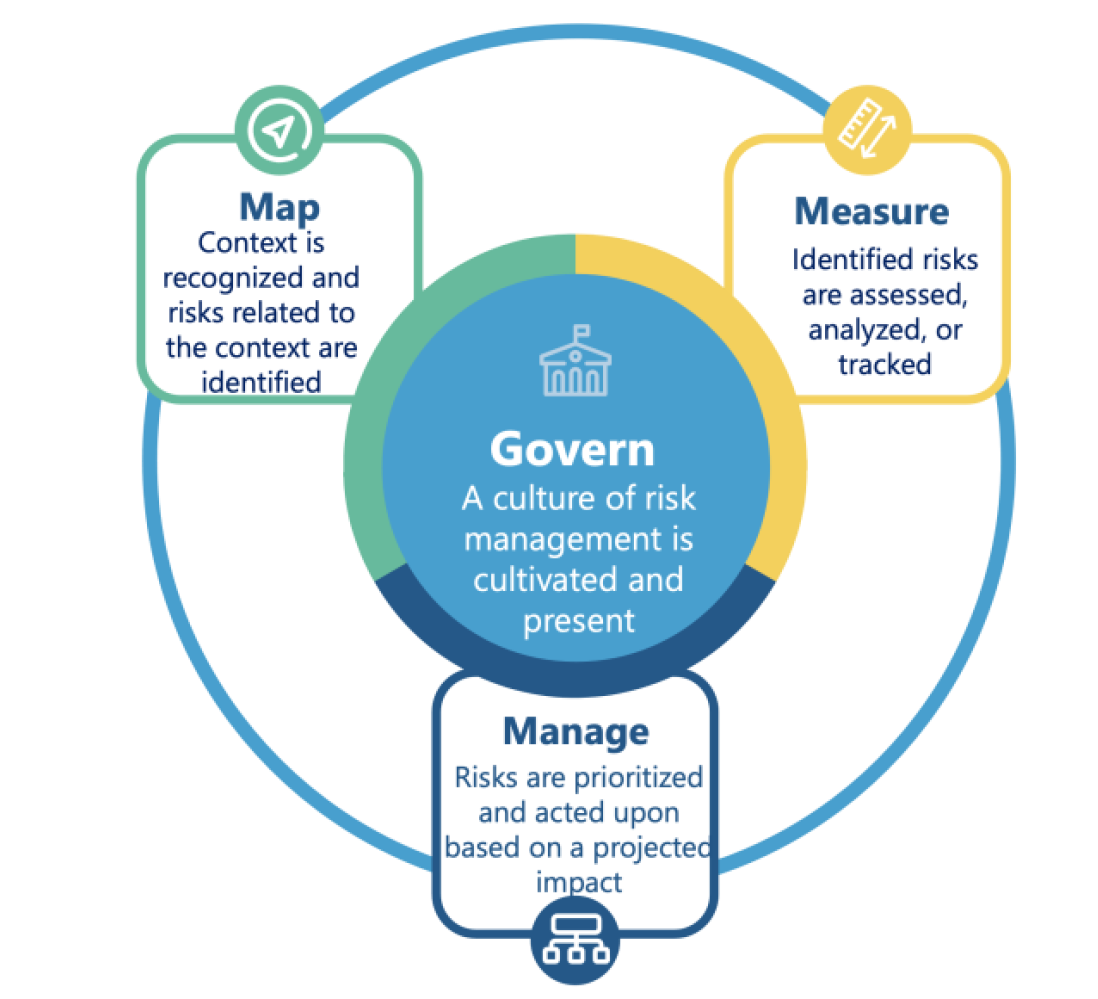

The “Core” section organizes the actions needed for AI risk management into four functions: map, measure, manage, and govern (see Figure 2 for a description of each function). Categories and subcategories further subdivide each function into specific outcomes and actions. For example, the categories within “map” break the function into establishing context, defining the system’s role and task(s), understanding its capabilities, and identifying its risks and harms.

Fig. 2. The AI RMF Core provides four functions to carry out AI risk management. Within each function, categories and subcategories specify risk management activities and outcomes (see Sections 6.1 to 6.4 of the draft AI RMF).
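For readers who think in data structures, the function, category, and subcategory hierarchy can be pictured as a small nested mapping. The Python sketch below is illustrative only and is not part of the NIST draft: the class and helper names (`CoreFunction`, `Category`, `category_names`) are hypothetical, and only the “map” categories named in this article are filled in. The other functions are left empty rather than guessed.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List


class CoreFunction(Enum):
    """The four Core functions named in the draft AI RMF."""
    MAP = "map"
    MEASURE = "measure"
    MANAGE = "manage"
    GOVERN = "govern"


@dataclass
class Category:
    """A category within a function (hypothetical container).

    Subcategories would hold the specific outcomes and actions;
    none are listed in this article, so the list starts empty.
    """
    name: str
    subcategories: List[str] = field(default_factory=list)


# Start every function with no categories, then fill in only what the
# article states: the four categories of the "map" function.
CORE: Dict[CoreFunction, List[Category]] = {fn: [] for fn in CoreFunction}
CORE[CoreFunction.MAP] = [
    Category("Establish context"),
    Category("Define the system's role and task(s)"),
    Category("Understand the system's capabilities"),
    Category("Identify risks and harms"),
]


def category_names(function: CoreFunction) -> List[str]:
    """Return the category names recorded for one Core function."""
    return [category.name for category in CORE[function]]


if __name__ == "__main__":
    for fn in CoreFunction:
        print(fn.value, "->", category_names(fn))
```

An organization adapting this idea would populate the remaining functions from the draft itself (Sections 6.1 to 6.4) rather than from this sketch.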

The “Profiles” section is not included in the current draft; when complete, it will highlight case studies of managing AI risk in specific contexts.

The framework emphasizes that risk management should be performed throughout the entire AI system life cycle (i.e., pre-design, design & development, test & evaluation, deployment, post-deployment, and decommissioning) to ensure it is continuous and timely.
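One way to picture “throughout the entire life cycle” is as a coverage check over the six stages listed above. The following Python sketch is an assumption-laden illustration, not something the framework prescribes: the `LifecycleStage` enum mirrors the stages named in this article, while `stages_missing_review` is a hypothetical helper that reports which stages have no recorded risk review.

```python
from enum import IntEnum
from typing import Iterable, List


class LifecycleStage(IntEnum):
    """AI system life cycle stages, in the order the article lists them."""
    PRE_DESIGN = 1
    DESIGN_AND_DEVELOPMENT = 2
    TEST_AND_EVALUATION = 3
    DEPLOYMENT = 4
    POST_DEPLOYMENT = 5
    DECOMMISSIONING = 6


def stages_missing_review(reviewed: Iterable[LifecycleStage]) -> List[LifecycleStage]:
    """Return, in life cycle order, the stages with no recorded risk review.

    Hypothetical helper: `reviewed` is whatever set of stages a team has
    already documented a risk review for.
    """
    done = set(reviewed)
    return [stage for stage in LifecycleStage if stage not in done]


if __name__ == "__main__":
    reviewed_so_far = [
        LifecycleStage.DESIGN_AND_DEVELOPMENT,
        LifecycleStage.DEPLOYMENT,
    ]
    for stage in stages_missing_review(reviewed_so_far):
        print("No risk review recorded for:", stage.name)
```

A team that reviews risk only at design and deployment would see four stages flagged, which is the kind of gap that continuous risk management is meant to close.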

Public comments were solicited to help strengthen the framework

NIST solicited public comments, alongside public workshops, as one way to invite public engagement. The comments on the first draft were wide-ranging. Some argued that categorizing attributes of trustworthy AI (Figure 1) strictly into technical and socio-technical categories overlooks the fact that many attributes have both technical and human components. For example, while “security” is categorized as technical, it also depends on deployment decisions and user behavior. Another comment argued that “guiding principles” should not be a class parallel to the other two, but should instead serve as a foundation for all other attributes. In response to Part II, one public comment posited that a design-development-deploy core (such as the one presented in BSA’s Framework to Build Trust in AI) is better aligned with real-world AI systems than a function-based approach.

Several public comments offered different definitions for attributes presented in the taxonomy. This lack of consensus on basic definitions, together with uncertainty about the future roles and uses of AI, highlights a challenge in establishing an AI framework: the technology is not fully developed, and its applications are still being identified and deployed. As Ms. Tabassi explains: “you often develop standards when there is a sound foundation for the technology. In the case of AI, we want to develop standards while research is advancing.”

AI standard setting is admittedly a “delicate act,” Ms. Tabassi explains: “premature efforts can result in standards that will not reflect the state of the affairs in technology, and can impede innovation and at the same time, developing standards too late cannot gain market acceptance.” Despite the challenges, the NIST AI RMF is a timely and critical tool to help organizations and the general public level-set on potential risks in AI development and use, and to reduce potential harms before they occur. NIST’s framework will help establish a process to identify, understand, assess, and then manage risks, with the goal of helping to ensure that we build and support AI that is trustworthy, inclusive, more effective, and thus responsible.

NIST’s “secret sauce” is public engagement

This framework is intended for a diverse audience: AI system stakeholders (e.g., development and business teams), operators and evaluators (e.g., researchers and auditors), and external stakeholders (e.g., trade groups and civil society), as well as the general public. Ms. Tabassi recognizes that this process needs to mirror the best practices NIST supports as part of the path toward responsible AI, including a diverse, wide-ranging, multi-stakeholder approach. She concludes that broad and continued public engagement is the “secret sauce” of NIST’s success in creating effective frameworks, and invites everyone to participate in the development of the AI RMF. In addition to periods of public comment, NIST has held multiple workshops featuring a diverse array of leaders and perspectives; these are open to the public, include public questions and comments, and are posted online. In short, NIST “wants to make sure that people who are involved in the discussions are a real representative of the diversity in America.” The second draft of the AI RMF will be released for public comment in the Summer/Fall of 2022. You can sign up here to stay informed about NIST’s AI activities.

Additional resources on AI risk management

Towards a Standard for Identifying and Managing Bias in Artificial Intelligence, NIST

Business Roundtable Policy Recommendations for Responsible Artificial Intelligence, Business Roundtable
